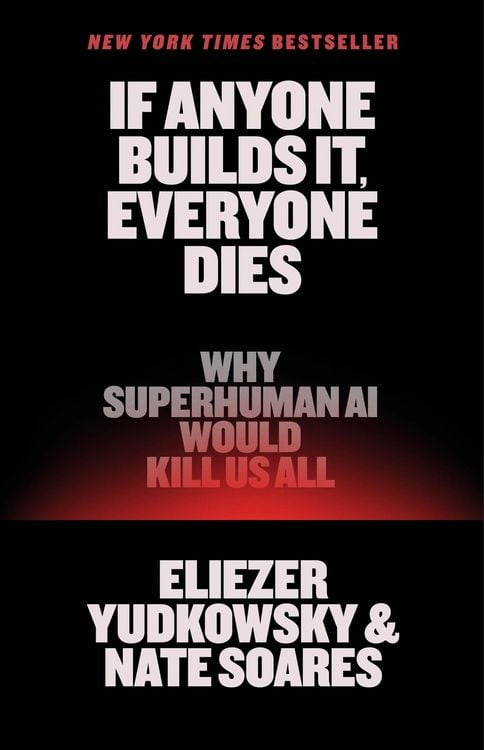

If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All


- Format: Hardcover (selected); also available as paperback and eBook

- Language: English

- Publisher: Little Brown USA, €24.99 (selected); Bodley Head Ltd, €27.99

Price: €24.99 (RRP €28.00), incl. VAT

Home delivery

Details

- Sales rank: 21734

- Binding: Hardcover

- Publication date: 16 September 2025

- Publisher: Little Brown USA

- Pages: 256

- Dimensions (L/W/H): 24 × 15.8 × 2.5 cm

- Weight: 463 g

- Language: English

- ISBN: 978-0-316-59564-3

Description

The scramble to create superhuman AI has put us on the path to extinction—but it’s not too late to change course, as two of the field’s earliest researchers explain in this clarion call for humanity.

“May prove to be the most important book of our time.”—Tim Urban, Wait But Why

In 2023, hundreds of AI luminaries signed an open letter warning that artificial intelligence poses a serious risk of human extinction. Since then, the AI race has only intensified. Companies and countries are rushing to build machines that will be smarter than any person. And the world is devastatingly unprepared for what would come next.

For decades, two signatories of that letter—Eliezer Yudkowsky and Nate Soares—have studied how smarter-than-human intelligences will think, behave, and pursue their objectives. Their research says that sufficiently smart AIs will develop goals of their own that put them in conflict with us—and that if it comes to conflict, an artificial superintelligence would crush us. The contest wouldn’t even be close.

How could a machine superintelligence wipe out our entire species? Why would it want to? Would it want anything at all? In this urgent book, Yudkowsky and Soares walk through the theory and the evidence, present one possible extinction scenario, and explain what it would take for humanity to survive.

The world is racing to build something truly new under the sun. And if anyone builds it, everyone dies.

“The best no-nonsense, simple explanation of the AI risk problem I've ever read.”—Yishan Wong, Former CEO of Reddit

What our customers say

I was on the fence about if I should read this book

Reviewed on 3 October 2025

Review number: 2614177

Rated: Book (hardcover)

Well-written, up-to-date, and highly relevant

Reviewed on 22 September 2025

Review number: 2603765

Rated: Book (hardcover)
